So, just a quick post to document an issue we experienced recently regarding service failures on boot, without any errors being logged, on Citrix XenApp servers.

The problem manifested itself, in this instance, on PVS targets running XenApp 6.5, although it can be replicated on other XenApp versions as well (and may well affect XenDesktop too, especially given that it now shares the same code base). It doesn’t appear to be tied to Provisioning Services itself. After an overnight scheduled reboot, we noticed that various critical services had stopped on the target devices. The most common ones are listed below:-

- Citrix Independent Management Architecture

- Citrix XTE Service

- User Profile Service

- AppSense User Virtualization Service

- Sophos Antivirus Service

- Network Store Interface Service

Now, I’m sure the more savvy amongst you can probably guess the culprit area straight away, but we didn’t quite grasp the correlation from the off. One thing common to these service failures was that they all involved critical components. If the Network Store Interface Service didn’t start, the Netlogon service would fail, and the PVS target was unable to contact AD. If the Citrix or User Profile services failed, the server would be up but users would be totally unable to log on and use applications. If AppSense was down, policies and personalization would not be applied. Whatever failed, the net result was disruption to, or failure of, core services.

Another common denominator was that, in most cases, nothing was written to the event logs at all. Occasionally you would see the Network Store Interface Service or the User Profile Service log an error about a timeout being exceeded while starting, but for the most part, and almost exclusively for the Citrix and AppSense services, there was literally no error at all. This was very unusual, particularly for the Citrix IMA service, which normally logs a cryptic error about why it has failed to start. All the other Citrix services could be observed starting up, but this one just didn’t log anything.

Now in the best principles of troubleshooting, we were aware we had recently installed the Lakeside SysTrack monitoring agent onto these systems, ironically enough, to work out how we could improve their stability. So the first step we took was to disable the service for this monitoring agent within the vDisk. However, the problems persisted. But if we actually fully uninstalled the Lakeside systems monitoring software, and then resealed the vDisk, everything went back to normal. It appeared clear that the issue lay somewhere within the Lakeside software, although not necessarily within the agent service itself.

Now what should have set us down the right track is the correlation between the Citrix, AppSense, Sophos and User Profile services: they all hook processes to achieve what they’re set up for. We needed to look in a particular area of the Registry to see what was being “hooked” into each process as it launched.

The key in question is this one:-

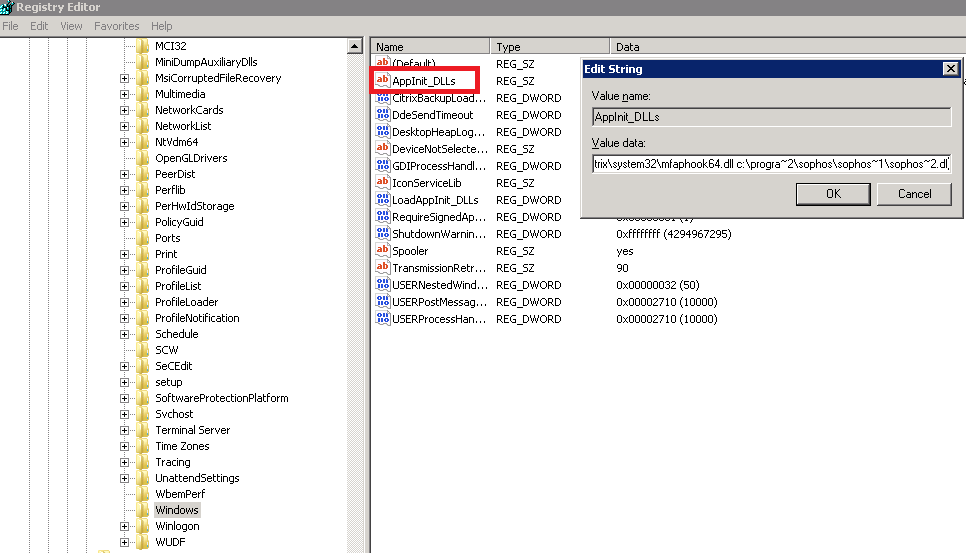

HKLM\Software\Microsoft\Windows NT\CurrentVersion\Windows

And the value is a REG_SZ called AppInit_DLLs

In a nutshell, every DLL specified in this value is loaded by each Microsoft Windows-based application that is running in the current logon session. Interestingly, Microsoft’s own documentation on this (which is admittedly eleven years old) makes the following statement: “we do not recommend that applications use this feature or rely on this feature”. Well, it’s clear that this advice is either wrong or widely ignored, because a lot of applications use this entry to achieve their “hooking” into various Windows processes.

In our instance, we found that the list of DLLs here contained entries for Sophos, Citrix, AppSense and a few others. But more importantly, the Lakeside agent had added its own entry here, a reference to lsihok64.dll (see the detail from the value below):

lsihok64.dll c:\progra~1\appsense\applic~1\agent\amldra~1.dll c:\progra~2\citrix\system32\mfaphook64.dll c:\progra~2\sophos\sophos~1\sophos~2.dll

Now the Lakeside agent obviously needs a hook to do its business, or at least some of it. It monitors thousands of metrics on an installed endpoint, which is what it’s there for. But it seemed rather obvious that the services we were seeing failures from were also named in this Registry value – and that the presence of the Lakeside agent seemed to be causing some issues. So how can we fix this?
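To make the contents of the value a little easier to eyeball, here is a minimal Python sketch that splits the string quoted above into its individual entries. It assumes the documented AppInit_DLLs format, where entries are separated by spaces or commas (which is why the paths are in 8.3 short-name form). The function name `split_appinit_dlls` is purely illustrative and not part of any vendor tool.

```python
import ntpath
import re


def split_appinit_dlls(value):
    """Split an AppInit_DLLs string into its individual DLL entries."""
    # Entries are separated by spaces or commas, so split on runs of either.
    return [entry for entry in re.split(r"[ ,]+", value.strip()) if entry]


# The value from the affected servers, as quoted above.
value = (
    r"lsihok64.dll "
    r"c:\progra~1\appsense\applic~1\agent\amldra~1.dll "
    r"c:\progra~2\citrix\system32\mfaphook64.dll "
    r"c:\progra~2\sophos\sophos~1\sophos~2.dll"
)

for entry in split_appinit_dlls(value):
    # ntpath handles Windows-style paths regardless of where the script runs.
    print(f"{ntpath.basename(entry):<16} {entry}")
```

Listing the entries one per line makes it obvious which vendors have a hook in place, and which entry has no path at all.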

If you remove the entry from here, the Lakeside agent will put it back when it initializes. That in itself is not a problem, but we need the entry never to be present at restart. There is an option to remove it entirely from within the Lakeside console, but this loses various aspects of the monitoring toolset. So how you approach the fix depends on whether you’re using a technology like PVS or MCS, which restores the system to a “golden” state at every restart, or whether your XenApp systems are more traditional server types.

If you’re using PVS or other similar technology:-

- Open the master image in Private Mode

- Shut down the Lakeside agent process

- Remove lsihok64.dll from the AppInit_DLLs value

- Set the Lakeside agent service to “Delayed Start”, if possible

- Reseal the image and put into Standard Mode

If you’re using a more traditional server:-

- Disable the “application hook” setting from the Lakeside console

- Shut down the Lakeside agent process

- Remove lsihok64.dll from the AppInit_DLLs value

- Set the Lakeside agent service to “Delayed Start”, if possible

- Restart the system
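The “Delayed Start” step in both lists can be done from the Services console, but it can also be scripted. As a rough sketch, the Python below builds (and can optionally run) the built-in `sc.exe config ... start= delayed-auto` command. Note that `ExampleAgentService` is a hypothetical placeholder, not the real Lakeside service name, which you would need to look up on your own system.

```python
import subprocess


def delayed_start_command(service_name):
    """Build the sc.exe command that sets a service to Automatic (Delayed Start)."""
    # sc.exe expects "start=" and its value as separate arguments.
    return ["sc.exe", "config", service_name, "start=", "delayed-auto"]


def set_delayed_start(service_name):
    """Run the command; needs an elevated session on the Windows server itself."""
    subprocess.run(delayed_start_command(service_name), check=True)


# "ExampleAgentService" is a hypothetical placeholder for the agent's service name.
print(" ".join(delayed_start_command("ExampleAgentService")))
```

Only `set_delayed_start` actually changes anything; the example just prints the command so you can check it before running it for real.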

There is a caveat to the latter of these – with the “application hook” disabled from the console, you will not see information on application or service hangs, you won’t get detailed logon process information, applications that run for less than 15 seconds will not record data, and 64-bit processes will not appear in the data recorder. For PVS-style systems, because they “reset” at reboot, the agent hook will never be in place at bootup (which is when the problems occur), so you can allow it to re-insert itself after the agent starts and give the full range of metric monitoring.

Also, be very careful when editing the AppInit_DLLs value: we managed to inadvertently fat-finger it and delete the Citrix hook entry in our testing. Which was not amusing for the testers, who lost the ability to run apps in seamless windows!
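One way to reduce the risk of that kind of slip is to work out the new string programmatically rather than editing it by hand. The following is only a sketch: it computes what the value should look like with a single named DLL removed, and does not touch the Registry itself. It assumes entries are separated by spaces or commas, and the helper name `remove_hook` is made up for this example.

```python
import ntpath
import re


def remove_hook(value, dll_name):
    """Return (new_value, removed) with entries named dll_name taken out."""
    entries = [e for e in re.split(r"[ ,]+", value.strip()) if e]
    target = dll_name.lower()
    removed = [e for e in entries if ntpath.basename(e).lower() == target]
    kept = [e for e in entries if ntpath.basename(e).lower() != target]
    # Rejoin with single spaces; every other entry is preserved, in order.
    return " ".join(kept), removed


original = (
    r"lsihok64.dll "
    r"c:\progra~1\appsense\applic~1\agent\amldra~1.dll "
    r"c:\progra~2\citrix\system32\mfaphook64.dll "
    r"c:\progra~2\sophos\sophos~1\sophos~2.dll"
)

new_value, removed = remove_hook(original, "lsihok64.dll")

# Sanity checks before anything is pasted back into the Registry.
assert removed == ["lsihok64.dll"]
assert "mfaphook64.dll" in new_value
print(new_value)
```

The two assertions are the important part: they confirm that exactly the Lakeside entry was removed and that the Citrix hook is still there. Any commas in the original string are replaced by spaces when the entries are rejoined.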

Once we removed the hook on our systems and set the Lakeside service to “Delayed Start” (so that the Citrix, AppSense and Sophos services were all fully started before the hook was re-inserted), we got clean restarts of the servers every time. So, if you’re using Lakeside SysTrack for monitoring and you are seeing unexplained service failures, removing this Registry hook (either from the Lakeside console or directly in regedit.exe) and then delaying the service start should sort you out.

Update: there is actually a second AppInit_DLLs value in the Registry that deals specifically with 32-bit processes on 64-bit platforms. You may need to remove the hook reference from there as well. The value is

HKLM\Software\Wow6432Node\Microsoft\Windows NT\CurrentVersion\Windows\AppInit_DLLs
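To check both locations in one go, something like the following Python sketch could be used on the server itself. It uses the standard `winreg` module and asks for the 64-bit and 32-bit Registry views explicitly rather than spelling out the Wow6432Node path, which should reach the same two values. The function names are illustrative, and the post doesn’t say what the 32-bit hook DLL is called, so the sketch simply lists whatever entries it finds in each view.

```python
import re
import sys

SUBKEY = r"Software\Microsoft\Windows NT\CurrentVersion\Windows"


def read_appinit_dlls(view):
    """Read AppInit_DLLs from the "64-bit" or "32-bit" Registry view (Windows only)."""
    import winreg  # imported here because the module only exists on Windows

    flag = winreg.KEY_WOW64_64KEY if view == "64-bit" else winreg.KEY_WOW64_32KEY
    access = winreg.KEY_READ | flag
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, SUBKEY, 0, access) as key:
        value, _value_type = winreg.QueryValueEx(key, "AppInit_DLLs")
    return value


def entries_per_view(reader=read_appinit_dlls):
    """Return {view: [entries]} for both views, using the given reader function."""
    result = {}
    for view in ("64-bit", "32-bit"):
        # Entries are assumed to be separated by spaces or commas.
        result[view] = [e for e in re.split(r"[ ,]+", reader(view).strip()) if e]
    return result


if sys.platform == "win32":
    for view, entries in entries_per_view().items():
        print(view, entries)
```

This only reads the values. The `reader` parameter is there so the parsing can be tried out with canned strings on a machine that isn’t a Windows server.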