Let’s talk about Deployment Groups. At many of the sites I visit, it strikes me that the Deployment Group structure has not been thought through sufficiently. What you end up with is a huge clump of Deployment Groups thrown together under all sorts of names – Bob’s Test Group, Windows 7 x86 Pre-Pilot Integration Group, NewGroup42, etc. – and straight away another part of your AppSense infrastructure is struck down by the familiar curse. The console looks a mess, there’s no order or structure to any of it, rules and configurations conflict with each other, nothing works as expected, the purpose and flow of the structure are nigh-on impossible to understand, and bringing it back to some semblance of normality becomes a project in itself.

It’s a common thing, and what really gets me hot under the collar is hearing people blame the software itself for the fact that they’ve deployed it in such a haphazard fashion! Admittedly, some things could be done better – it never fails to annoy me when I see machines sitting in multiple groups and non-existent OUs referenced in membership rules, with no warning in the console to flag these sorts of errors – but the limitations of the console aside, I don’t think there’s any reason not to at least put the effort in to make the deployment easier to understand.

There’s no single right or wrong way to set up your Deployment Group structure – much like an AD/GP environment, it can be organized in various different ways – but there are some common mistakes you may want to avoid.

To use the Management Console or not?

Maybe now is a good time to mention that there is a school of thought that says the Management Console should be used only for deploying configurations, with everything else in there controlled in other ways. In practice, your alerts would be handled by some centralized monitoring tool – maybe System Center Operations Manager for your high-end customers, and probably a bit of creative PowerShell scripting for smaller environments – and agents would be deployed by a tool such as System Center Configuration Manager, leaving only the deployment of configurations to be handled by the Management Console itself. You could go one step further and deliver the configurations through SCCM as well, should you wish to, leaving the Management Console as a tool for infrastructure overview alone. However, a lot of places don’t have enough investment in System Center to take advantage of a tool like SCCM (although if you do, I definitely recommend deploying your agents with it). And even if you offload the normal Management Console tasks to a different technology, you will still want to set up the Deployment Groups in as tidy and logical a fashion as is humanly possible.

Considerations around Deployment Groups

There are lots of things to sit and think about before you start organizing your Deployment Groups. This is the part that most people fail to do!

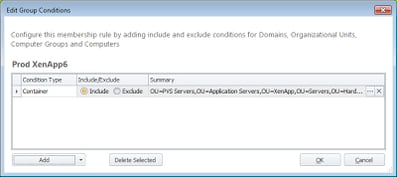

Membership Rules

Membership Rules for your Deployment Groups can be configured either by computer name, or dynamically by using an Active Directory OU or Computer Group. In the interests of keeping everything tidy – think of how you would populate Worker Groups under Citrix XenApp 6.x – it’s much better, in my opinion, to use the dynamic option, preferably from an OU. As a computer can only be a member of one OU at a time, using this option exclusively means that you can’t inadvertently add a system to two different Deployment Groups. It also means you can deploy the agents and configurations from a Deployment Group simply by dropping a system into the correct OU, cutting down on administrative tasks.

Group Conditions showing a Deployment Group populated by an Active Directory OU
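To illustrate why OU-only rules avoid duplicate membership, here is a minimal Python sketch of the kind of check the console won’t do for you: flagging machines matched by more than one Deployment Group, and OU rules that point at OUs which don’t exist. It is a hypothetical model, not an AppSense API. The rule kinds (`name`, `ou`, `ad_group`), the class and function names and the sample OUs are all illustrative, and it assumes an OU rule matches only computers sitting directly in that OU (no child-OU inheritance).

```python
# Hypothetical model of Deployment Group membership rules (not an AppSense API).
from dataclasses import dataclass


@dataclass(frozen=True)
class Computer:
    name: str
    ou: str  # an AD computer object sits in exactly one OU
    ad_groups: frozenset = frozenset()


@dataclass(frozen=True)
class Rule:
    kind: str  # "name", "ou" or "ad_group" (illustrative labels)
    value: str

    def matches(self, pc: Computer) -> bool:
        if self.kind == "name":
            return pc.name.lower() == self.value.lower()
        if self.kind == "ou":
            # Assumption: exact OU match only; child OUs are not included.
            return pc.ou.lower() == self.value.lower()
        if self.kind == "ad_group":
            return self.value in pc.ad_groups
        raise ValueError(f"unknown rule kind: {self.kind}")


def find_overlaps(groups, computers):
    """Map each computer matched by more than one Deployment Group to those groups."""
    overlaps = {}
    for pc in computers:
        hits = [g for g, rules in groups.items() if any(r.matches(pc) for r in rules)]
        if len(hits) > 1:
            overlaps[pc.name] = hits
    return overlaps


def missing_ous(groups, known_ous):
    """List (group, OU) pairs where an OU rule points at an OU that doesn't exist."""
    known = {ou.lower() for ou in known_ous}
    return [(g, r.value) for g, rules in groups.items() for r in rules
            if r.kind == "ou" and r.value.lower() not in known]


# Illustrative data only.
pcs = [
    Computer("W7-001", "OU=Win7,OU=Prod,DC=corp,DC=local", frozenset({"Pilot"})),
    Computer("XA-01", "OU=XenApp,OU=Prod,DC=corp,DC=local"),
]
ou_only = {
    "Prod - Windows 7": [Rule("ou", "OU=Win7,OU=Prod,DC=corp,DC=local")],
    "Prod - XenApp": [Rule("ou", "OU=XenApp,OU=Prod,DC=corp,DC=local")],
}
mixed = {**ou_only,
         "Bob's Test Group": [Rule("name", "W7-001"), Rule("ad_group", "Pilot")],
         "NewGroup42": [Rule("ou", "OU=Old,OU=Prod,DC=corp,DC=local")]}
existing_ous = [pc.ou for pc in pcs]  # stand-in for the OUs that really exist in AD

print(find_overlaps(ou_only, pcs))   # {}
print(find_overlaps(mixed, pcs))     # W7-001 is in two groups
print(missing_ous(mixed, existing_ous))
```

With OU rules alone, each computer can land in at most one group; it is the name and AD group rules in the mixed set that let W7-001 slip into two.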

Geographical delineations

Some people think you should separate Deployment Groups by location. Is this a good idea?

Normally the considerations to do with location revolve around traffic. You don’t want Personalization data being copied across the WAN, or users connecting to a Management Server on the other side of the world. But these processes are controlled by means other than Deployment Groups – Personalization Servers should be assigned via GPO (ideally) or controlled by the sites list, and Management Servers can be load balanced in a number of ways. The only location-related setting configured per Deployment Group is the Failover Servers list, and even that assumes all the Deployment Groups are using the same primary server. Realistically, geographical delineations should dictate the presence of additional Management Servers and Personalization Servers, rather than the layout of Deployment Groups within a Management Server.

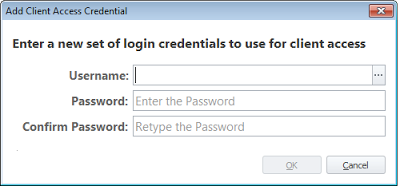

Access Credentials

If you need to supply different sets of credentials for different groups of endpoints, then you will need separate Deployment Groups for them. This should really only be necessary across multiple forests or domains. In most cases, global Client Access Credentials should suffice, and there should be no reason to separate machines into Deployment Groups based on this requirement.

Configurations

It’s natural to think longest about configurations when you start considering what is required around Deployment Groups. After all, each Deployment Group can only have one configuration assigned (well, one each for Environment Manager, Application Manager and Performance Manager respectively). If your devices or operating systems will need different configurations, then you’ll need to divide them into Deployment Groups, right?

However, let’s think carefully about EM in particular. One of the biggest selling points of EM is the broad granularity and huge spectrum of configurable Conditions. You can easily put together a single configuration that covers VDI/non-VDI, different operating systems, CPU architectures, SBC/non-SBC – the list goes on and on. From a purely Environment Manager standpoint, you don’t necessarily need to separate your devices based around the configurations.

Performance Manager and Application Manager, however, are slightly different beasts, in that they don’t have the same breadth of Conditions available within them. Performance Manager in particular, if you use it, will at the very least require you to separate the SBC (RDS or XenApp) systems into a specific Deployment Group, as the Performance Manager settings for this type of endpoint will be detrimental to standard laptop or desktop endpoints. Application Manager can be controlled in a fairly granular fashion through the use of Group Rules, so you may be able to use a single configuration across multiple sets of systems – but if anything device- or OS-specific is needed within it, you will have to split those systems into separate Deployment Groups.

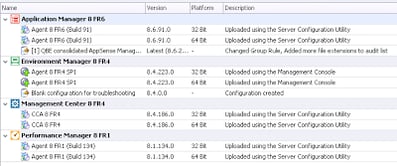

The idea of using a single – monolithic, if you please – configuration across a broad range of endpoints is to avoid another of the common issues plaguing AppSense deployments. If you have multiple Deployment Groups whose configurations share some identical settings, then whenever one of those settings is updated in one configuration, it also has to be updated in all of the others. Miss one, and straight away you have a problem. The configurations may also start to diverge from each other as they are updated individually, causing inconsistency in how settings are applied. The layering and merging available in AppSense 8 FR 4 and up can mitigate some of these issues, but I always feel better using a single Environment Manager configuration (and Application Manager, if possible). Performance Manager is less of a problem, given that the settings in PM are generally far less complicated than those in the other two parts of the DesktopNow suite.
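If you do end up with several configurations that are meant to share settings, a periodic comparison can catch divergence before it bites. The Python sketch below is a minimal illustration under a big assumption: each configuration has already been reduced to a flat dictionary of setting name to value, which is not how AppSense stores configurations. The setting names, values and the `find_drift` helper are all hypothetical.

```python
# Hypothetical drift check: configurations modelled as flat {setting: value} dicts.
_MISSING = object()  # sentinel so "setting absent" never equals a real value


def find_drift(configs):
    """Return {setting: {config name: value}} for every setting that is not
    identical (or not present) in all of the given configurations."""
    keys = sorted(set().union(*(cfg.keys() for cfg in configs.values())))
    drift = {}
    for key in keys:
        values = {name: cfg.get(key, _MISSING) for name, cfg in configs.items()}
        first, *rest = values.values()
        if any(v != first for v in rest):
            drift[key] = {name: ("<missing>" if v is _MISSING else v)
                          for name, v in values.items()}
    return drift


# Illustrative settings only; names and values are made up.
configs = {
    "EM Windows 7": {"HomeDrive": "H:", "ScreenSaverTimeout": 600, "Proxy": "proxy1:8080"},
    "EM XenApp": {"HomeDrive": "H:", "ScreenSaverTimeout": 900, "Proxy": "proxy1:8080"},
    "EM XenDesktop": {"HomeDrive": "H:", "ScreenSaverTimeout": 600},
}
for setting, per_config in find_drift(configs).items():
    print(setting, per_config)
```

Not every difference is a mistake (a different timeout on XenApp may be deliberate), so treat the output as a review list rather than a list of errors. It is also a fair illustration of why one shared configuration is easier: with a single configuration there is nothing to compare.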

Deployment Settings / Functional Environments

Deployment Settings – computer/upload poll periods, and installation schedules – are set on a Deployment Group basis. Therefore, if you have groups of systems that require different deployment settings, you will need to separate them into Deployment Groups.

This leads us on quite nicely to what I term “functional environments”. Different functional environments are usually what necessitate different deployment settings. You might have several – Production, Pre-Production, Test, etc. For instance, in Test, agents and configurations might be deployed immediately, whereas in Production they might not be deployed by the Management Console at all, with deployment handled instead through a tool such as SCCM under strict change control. In my opinion, functional environments are the main use case for Deployment Groups, as they require different deployment settings and, at various times, different configurations.

One point worth making here is that the Test environment probably needs to be as tightly controlled as Production. Why? Because unless Test mirrors the Production environment as closely as possible, it’s never going to be an adequate testing area! I generally allow people to apply updated configurations to Test – but as soon as they have verified whether or not their changes work, the Test environment is rolled back to the same configuration as Production. Only when a change that has been verified in Test is applied to the live Production environment is the updated configuration also applied to Test.

With this in mind, I generally maintain a “Development” or “Sandbox” functional environment in addition to Test. The Development area can be altered with impunity, and is merely used for experimentation prior to running changes through Test.
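The Test/Production policy described above is essentially a small promotion workflow. As a hedged sketch, here it is as a Python class; the class name, method names and version labels are hypothetical stand-ins for whatever change-control process you actually use, and Development is left out because it can be changed freely.

```python
# Hypothetical model of the Test/Production promotion policy (names are illustrative).
class ConfigPromotion:
    def __init__(self, release: str) -> None:
        self.production = release        # configuration live in Production
        self.test = release              # Test mirrors Production by default
        self.verified: set[str] = set()  # candidates that passed in Test

    def trial(self, candidate: str) -> None:
        """Temporarily apply a candidate configuration to Test."""
        self.test = candidate

    def end_trial(self, passed: bool) -> None:
        """Record the outcome, then roll Test back to the Production configuration."""
        if passed:
            self.verified.add(self.test)
        self.test = self.production

    def promote(self, candidate: str) -> None:
        """Apply a verified candidate to Production, and only then to Test."""
        if candidate not in self.verified:
            raise ValueError(f"{candidate} has not been verified in Test")
        self.production = candidate
        self.test = candidate


env = ConfigPromotion("EM v1.0")
env.trial("EM v1.1")
env.end_trial(passed=True)
print(env.production, "|", env.test)  # EM v1.0 | EM v1.0
env.promote("EM v1.1")
print(env.production, "|", env.test)  # EM v1.1 | EM v1.1
```

The key design point is that Test only ever differs from Production during a trial, so whatever is sitting in Test at rest is always a faithful copy of live.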

Delegation of privilege

Yet another reason why you might choose to separate systems into Deployment Groups is for management and delegation purposes. As we discussed in a previous post, security in AppSense DesktopNow can be set on a server- or object-based model.

If you want certain sets of devices to be managed by certain groups of users but not others, you can set security on the Deployment Groups themselves. This allows users to log in to the Management Console and manage only the devices you have provided them access to.

It’s worth mentioning here, however, that if different Deployment Groups share a single configuration, adding users or groups to the Deployment Group security permissions won’t give them access to the configuration. A configuration is treated as a separate object inside AppSense DesktopNow, so you will need to delegate privilege on the assigned configurations (and agents, if necessary) as well as on the Deployment Group itself.
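To make the separate-object point concrete, here is a minimal Python model in which every console object (Deployment Group, configuration, agent) carries its own access list. The object names, principals and the `missing_rights` helper are hypothetical; this is a simplification for reasoning about delegation, not the DesktopNow security model itself.

```python
# Hypothetical permission model: each console object has its own access list.
acl = {
    "Deployment Group: Production - XenApp": {"Citrix Admins", "Desktop Team"},
    "Configuration: EM Production": {"Desktop Team"},
    "Configuration: PM Production XenApp": {"Citrix Admins"},
    "Agent: EM Agent": {"Desktop Team"},
}
assigned = {
    "Deployment Group: Production - XenApp": [
        "Configuration: EM Production",
        "Configuration: PM Production XenApp",
        "Agent: EM Agent",
    ],
}


def missing_rights(principal, group):
    """Objects assigned to `group` that `principal` has no rights on, even though
    it may have been granted rights on the Deployment Group itself."""
    return [obj for obj in assigned.get(group, [])
            if principal not in acl.get(obj, set())]


print(missing_rights("Citrix Admins", "Deployment Group: Production - XenApp"))
```

Here Citrix Admins can manage the Deployment Group but would still be locked out of the shared EM configuration and agent, which is exactly the gap you need to close when delegating.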

Auditing

The final reason you might use Deployment Groups is auditing. Enterprise auditing settings can be configured on a per-Deployment Group basis, so if you need heavy auditing for one set of systems and none for others, this is the way to achieve it.

Auditing settings would probably tie in to the functional environments, in most cases.

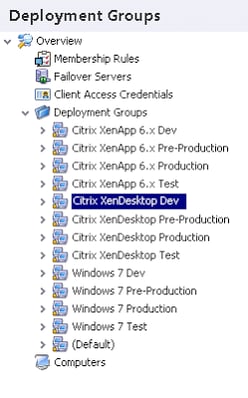

An example

Naturally, every environment will be different, but here’s a quick example of a Deployment Group structure.

Each Deployment Group is populated from an Active Directory OU.

In the main, it is divided into functional environments – Dev, Test, Pre-Production and Production. Each functional environment has different Deployment Settings and Enterprise Auditing settings. Each functional environment also has its own configuration – but the configurations are all identical apart from those in Dev.

From there, each environment is divided into three main areas – XenApp, XenDesktop and Windows 7. The reason for this separation is that a different Performance Manager configuration is applied to each type, so the structure has been subdivided by configuration. The Environment Manager and Application Manager configurations are shared.

The Test, Pre-Production and Production configurations for each device type are the same, and are updated together only under change control. Small changes can be made to Test and Pre-Production but these are always rolled back to the current release version afterwards.
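The example structure can be sketched as data, which also makes the “always rolled back” rule checkable. In this hypothetical Python model each configuration is represented only by a version label, so “identical” simply means “same label”; the OU paths, version strings and helper names are illustrative assumptions rather than anything AppSense provides.

```python
# Hypothetical model of the example Deployment Group structure.
from itertools import product

ENVIRONMENTS = ("Dev", "Test", "Pre-Production", "Production")
DEVICE_TYPES = ("XenApp", "XenDesktop", "Windows 7")
CONTROLLED = ("Test", "Pre-Production", "Production")  # under change control


def build_groups(release: str, dev_build: str) -> dict[str, dict[str, str]]:
    """One Deployment Group per (environment, device type), each fed by its own OU."""
    groups = {}
    for env, device in product(ENVIRONMENTS, DEVICE_TYPES):
        version = dev_build if env == "Dev" else release
        groups[f"{env} - {device}"] = {
            "env": env,
            "device": device,
            "ou": f"OU={device},OU={env},OU=AppSense,DC=example,DC=com",
            "EM": f"EM {version}",           # shared across device types
            "AM": f"AM {version}",           # shared across device types
            "PM": f"PM {device} {version}",  # one PM configuration per device type
        }
    return groups


def misaligned(groups: dict[str, dict[str, str]]) -> list[str]:
    """Controlled groups whose configurations differ from Production's
    for the same device type (e.g. a trial that was never rolled back)."""
    prod = {g["device"]: g for g in groups.values() if g["env"] == "Production"}
    bad = []
    for name, g in groups.items():
        if g["env"] in CONTROLLED and g["env"] != "Production":
            ref = prod[g["device"]]
            if any(g[k] != ref[k] for k in ("EM", "AM", "PM")):
                bad.append(name)
    return bad


groups = build_groups(release="2.3", dev_build="2.4-dev")
print(len(groups))          # 12
print(misaligned(groups))   # []
groups["Test - XenApp"]["PM"] = "PM XenApp 2.4-rc1"  # a trial left in place
print(misaligned(groups))   # ['Test - XenApp']
```

Dev is deliberately excluded from the check, since it is the one area allowed to differ.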

Summary

If you put the required amount of thought into your Deployment Group structure, you should end up with a clean and logical layout in the console. At many sites I spend a lot of time clearing redundant entries and groups out of the Management Console, and it’s nothing but an embuggerance to have to deal with these problems.

However, do bear in mind that there are no hard-and-fast rules to setting this up – every environment will be different. Your aim, coupled with the guidelines above, should be to make the structure as clean and simple as possible, so that it is easy to administer, adapt and support.